Every word in clinical documentation matters, and so does who said it. Did the patient report the symptom, or did the caregiver? Did the physician recommend the medication, or did the patient ask for it?

Many generic ambient AI scribes operate by recording audio and converting it into a single, continuous block of text. They then rely on the Large Language Model (LLM) to infer who is speaking based solely on the context of the words. If a voice says "I have a headache," the AI assumes it is the patient. If a voice asks "How long have you had it?" the AI assumes it is the provider.

This contextual guessing creates potential clinical risk and degrades the AI's ability to produce high-quality clinical documentation. In the era of Google and ChatGPT, patients frequently use sophisticated medical terminology, so vocabulary alone is an unreliable clue to who is speaking. If a patient recounts a previous specialist's recommendation by saying "We decided to increase the dosage to 50 milligrams," a generic AI scribe might misattribute this statement to the current physician, turning a piece of medical history into an incorrect active treatment plan. Without distinct speaker identification, this historical report can become a documentation error that could affect treatment decisions, insurance claims, or legal proceedings.

Correcting these misattributions requires the clinician to scour the entire block of unattributed text, turning what should be a quick review into a detailed editing session. If an error is missed, it risks becoming part of the legal medical record.

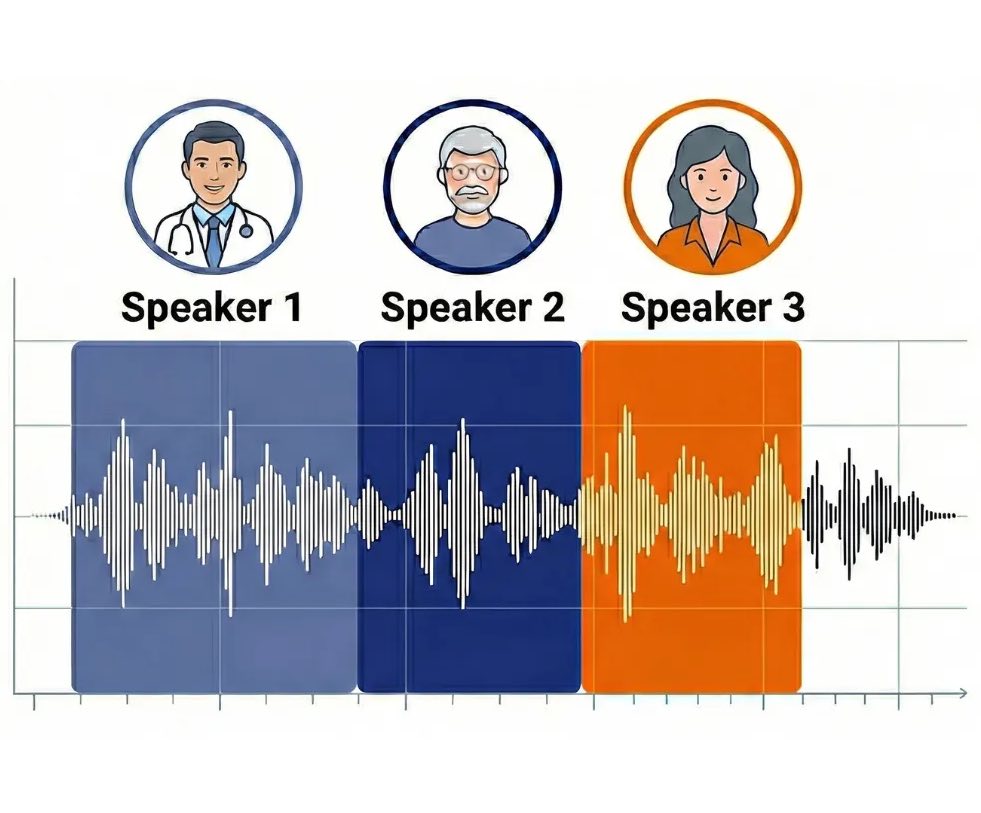

How It Works: Acoustic Fingerprinting and Diarization

To understand the BastionGPT difference, it helps to look at the technology beneath the surface. Most automated note generation platforms treat the transcript as a flat document. They feed this text into the AI model and ask it to add labels like "Doctor" and "Patient" after the fact. This method often struggles when the conversation is complex or rapid-fire, involves multiple speakers, or includes interruptions.

The BastionGPT Approach

BastionGPT's AI Scribe is designed to avoid relying on the LLM to guess the speaker based on text alone. Instead, the platform utilizes specialized speaker identification technology driven by these core components:

Acoustic Fingerprinting & Speaker Embeddings

The system creates a unique digital ID, or speaker embedding, for each participant’s voice. By analyzing the distinct acoustic fingerprint of each speaker, BastionGPT can track "Speaker 1" versus "Speaker 2" throughout the session.
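To make the idea of a voice print concrete, here is a minimal, generic sketch in Python. It is not BastionGPT's implementation. It assumes a separate speaker-embedding model (not shown) has already turned each short audio segment into a fixed-length vector, and it uses made-up three-dimensional vectors in place of real embeddings. Each segment is compared with every known speaker's average embedding by cosine similarity: it joins the closest match if the similarity clears a threshold (0.75 here, an arbitrary illustrative value) and otherwise starts a new speaker. The function names are hypothetical.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two embedding vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # an all-zero vector carries no voice information
    return dot / (norm_a * norm_b)


def assign_speakers(embeddings: list[list[float]], threshold: float = 0.75) -> list[str]:
    """Label each segment embedding with a speaker ID in a single pass.

    Each speaker is represented by the running mean of its segment
    embeddings (its "voice print"). A segment joins the most similar
    speaker if the similarity reaches `threshold`; otherwise it starts
    a new speaker.
    """
    centroids: list[list[float]] = []  # running-mean embedding per speaker
    counts: list[int] = []             # number of segments per speaker
    labels: list[str] = []
    for emb in embeddings:
        best, best_sim = None, -1.0
        for i, centroid in enumerate(centroids):
            sim = cosine_similarity(emb, centroid)
            if sim > best_sim:
                best, best_sim = i, sim
        if best is None or best_sim < threshold:
            # No known voice is close enough: register a new speaker.
            centroids.append(list(emb))
            counts.append(1)
            best = len(centroids) - 1
        else:
            # Fold this segment into the matched speaker's running mean.
            counts[best] += 1
            n = counts[best]
            centroids[best] = [c + (e - c) / n for c, e in zip(centroids[best], emb)]
        labels.append(f"Speaker {best + 1}")
    return labels


# Toy embeddings: segments 1 and 3 come from one voice, 2 and 4 from another.
segments = [[0.9, 0.1, 0.0], [0.1, 0.95, 0.05], [0.85, 0.2, 0.05], [0.05, 0.9, 0.1]]
print(assign_speakers(segments))  # ['Speaker 1', 'Speaker 2', 'Speaker 1', 'Speaker 2']
```

This one-pass version labels segments as they arrive. Systems that can wait for the full recording typically cluster all segment embeddings together instead, which is one reason processing the whole session can improve accuracy.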

Voice Activity Detection (VAD)

Before identifying who is speaking, the system must identify that someone is speaking. Advanced VAD technology filters out non-speech audio such as the hum of HVAC systems, typing sounds, or background noise. This is especially valuable for in-person sessions where ambient recording picks up environmental sounds that would otherwise confuse speaker detection.
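The simplest form of voice activity detection is an energy threshold: frames whose loudness stays below a cutoff are treated as non-speech. The sketch below illustrates only that idea and is not BastionGPT's method. Production VAD systems are commonly model-based and far better than a fixed threshold at rejecting steady noise such as HVAC hum. The frame length, threshold, and `hangover` values, as well as the function names, are illustrative assumptions.

```python
import math


def frame_rms(samples: list[float], frame_len: int) -> list[float]:
    """Split samples into non-overlapping frames and return each frame's RMS energy."""
    rms = []
    for start in range(0, len(samples) - frame_len + 1, frame_len):
        frame = samples[start:start + frame_len]
        rms.append(math.sqrt(sum(s * s for s in frame) / frame_len))
    return rms


def energy_vad(samples: list[float], frame_len: int = 160,
               threshold: float = 0.1, hangover: int = 2) -> list[bool]:
    """Return one flag per frame: True where speech is likely present.

    `hangover` keeps frames marked as speech for a few frames after the
    energy drops, so brief pauses between words are not chopped out.
    """
    flags = []
    quiet_run = hangover + 1  # start in the "silence" state
    for energy in frame_rms(samples, frame_len):
        if energy >= threshold:
            quiet_run = 0
        else:
            quiet_run += 1
        flags.append(quiet_run <= hangover)
    return flags


# Faint background noise, a short loud burst standing in for speech, then noise again.
signal = [0.01] * 12 + [0.5, -0.5] * 4 + [0.01] * 16
print(energy_vad(signal, frame_len=4, threshold=0.1, hangover=1))
```

With a hangover of one frame, the first quiet frame after the burst is still kept as speech; the remaining quiet frames are discarded.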

Speaker Diarization

This is the processing engine that combines VAD and acoustic fingerprints to map "who spoke when." Diarization partitions the audio stream into distinct segments so that the transcript accurately reflects the back-and-forth flow of conversation even when speakers interrupt one another or speak in short bursts.
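Combining the two signals can be sketched as follows. Given one VAD decision and one speaker label per audio frame, consecutive speech frames from the same speaker are merged into timed segments. This is a simplified, hypothetical illustration (the `Segment` type and `diarize` function are invented for this example), not BastionGPT's diarization engine.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    start: float  # seconds
    end: float    # seconds
    speaker: str


def diarize(speech_flags: list[bool], frame_speakers: list[str],
            frame_sec: float = 0.02) -> list[Segment]:
    """Merge per-frame decisions into "who spoke when" segments.

    speech_flags[i]: VAD result for frame i (True = speech).
    frame_speakers[i]: speaker label for frame i from the embedding step.
    A non-speech frame closes the current segment; a change of speaker
    starts a new one, so quick interruptions become separate turns.
    """
    segments: list[Segment] = []
    current = None
    for i, (is_speech, spk) in enumerate(zip(speech_flags, frame_speakers)):
        t0, t1 = round(i * frame_sec, 3), round((i + 1) * frame_sec, 3)
        if not is_speech:
            current = None  # silence or noise ends the ongoing turn
            continue
        if current is not None and current.speaker == spk:
            current.end = t1  # same voice keeps talking: extend the turn
        else:
            current = Segment(t0, t1, spk)
            segments.append(current)
    return segments


flags = [True, True, False, True, True, True]
speakers = ["Speaker 1", "Speaker 1", "Speaker 1", "Speaker 2", "Speaker 2", "Speaker 1"]
for seg in diarize(flags, speakers, frame_sec=0.5):
    print(f"{seg.start:.1f}-{seg.end:.1f}s  {seg.speaker}")
```

Here Speaker 1 talks, pauses, Speaker 2 answers, and Speaker 1 cuts back in immediately; the interruption still produces its own segment because the label changes even though there is no silence between the turns.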

Ambient Optimization

The identification tool is optimized for ambient recordings where devices may be stationary while speakers move around the room. This helps maintain accuracy even when audio volume varies between the clinician near the microphone and a patient across the room.

Speed vs. Accuracy

Quality takes time. Some generic AI scribes prioritize instant output, streaming text to the screen as fast as possible by skipping complex audio processing. BastionGPT prioritizes clinical accuracy. Our system takes the time to map speaker embeddings and filter background noise based on clues from the full length of the session. This processing may add time to the generation process compared to basic tools, but it results in a safer, more reliable medical transcript. Transcripts can take between 5 and 60 minutes to generate, but you can process multiple sessions at once; there is no need to wait for one file to finish before starting a new session.

Clinical Use Case: Solving the "Cocktail Party Problem"

In audiology, the difficulty of focusing on a single voice in a noisy or crowded room is known as the "Cocktail Party Problem." In healthcare, this manifests in complex clinical environments where multiple voices contribute to the care plan. BastionGPT’s diarization engine is built to handle these multi-source dialogues effectively.

Family and Couples Therapy (Triad Encounters)

Appointments in pediatrics, geriatrics, and couples therapy rarely happen in isolation. They involve third parties such as parents, caregivers, or partners whose input must be distinguished from the patient’s. A generic AI scribe might attribute a symptom description to the patient when it was actually the caregiver reporting it.

- BastionGPT distinguishes between the provider, the patient, and the collateral source, so the documentation reflects who provided the history. This supports higher accuracy in family therapy notes and geriatric assessments.

Group Therapy

Mental health workflows often require summarizing sessions with multiple participants, such as group therapy. In a group setting, a flat transcript is difficult to use for tracking individual progress because contextual guessing often fails when multiple patients discuss similar themes.

- BastionGPT supports multi-speaker recognition for up to four distinct speakers on standard plans and up to 12 on Enterprise plans. This allows facilitators to generate summaries that better attribute insights to specific individuals rather than the group as a whole.

Clinical Rounds

Complex hospital workflows involve interdisciplinary clinical rounds where multiple providers discuss a patient's care. Attributing input to the specific specialist (e.g., the pharmacist vs. the physician) is critical for the care plan to reflect the specific expertise of each team member.

- Voice recognition capabilities help create care plans that more accurately reflect the contributions of each team member, streamlining care coordination and supporting accountability.

Psychosocial and Learning Assessments

Lengthy evaluations in educational and clinical psychology often integrate data from multiple sources, including students, teachers, and parents. Accurate speaker attribution ensures that each observation is assigned to the correct source, which is critical for school assessments and learning disability evaluations.

- Clearly separating the clinician's observations from the parent’s developmental history helps prevent the diagnostic error of conflating sources (e.g., attributing a clinician's report of "sensory overwhelm" to the parent), so the final assessment accurately records the source of every observation.

Speech-Language Pathology (SLP) Sessions

In therapy sessions where a clinician models a sound or phrase and the client repeats it, attribution is critical for measuring success. A standard transcript often merges the two voices, making it impossible to distinguish the prompt from the performance. You cannot measure accuracy if the record does not reflect who pronounced the word correctly.

- BastionGPT uses diarization to separate the therapist’s cue (e.g., "Say 'Rabbit'") from the client's attempt (e.g., "Wabbit"), which supports more accurate progress tracking within the Subjective and Objective data fields.

Palliative Care and Family Goals of Care

Sensitive end-of-life discussions often involve a provider, a social worker, a patient, and multiple distinct family members deciding on a course of action. Documenting consensus is vital; if a daughter agrees to hospice but a son objects, a generic note stating "Family agreed to hospice" is legally dangerous and clinically inaccurate.

- BastionGPT helps distinguish between specific family members, allowing the note to reflect disparate opinions (e.g., Speaker 3 expressed concern about pain, while Speaker 4 asked about feeding tubes), helping the medical record reflect the true complexity of the decision.

Independent Medical Exams (IMEs) and Workers' Comp

Independent Medical Exams (IMEs) and workers’ compensation evaluations depend on clear, structured documentation. Providers need an easy way to capture what was asked and what was answered, and to translate both into an accurate report.

- BastionGPT generates organized notes that distinguish the examiner’s questions from the claimant’s responses. This reduces ambiguity during report writing and makes it easier to draft summaries, narratives, and findings.

The Efficiency Argument: Editing vs. Reviewing

The primary goal of an AI scribe is to save time. However, the hidden cost of low-quality AI is the time spent editing.

Clinicians must always review the entire generated note to ensure accuracy. However, when a provider receives a note where speaker attribution is scrambled, the cognitive load of reviewing that note increases. The clinician must remember the conversation to verify who said what. This effectively re-does the work the AI was intended to handle. If the AI attributes the patient's refusal of medications to the doctor, the clinician has to delete and rewrite that section to avoid a documentation error.

BastionGPT’s approach minimizes these issues. When speaker labels are more accurate from the start, the review process becomes faster and less prone to error. You are verifying clinical nuance rather than heavily editing a low-quality AI guess about who said what. This difference reduces the risk that a misattributed statement slips into the permanent record.

Furthermore, clean data inputs lead to cleaner data outputs. Whether you need a SOAP note, clinical assessment, or to capture the details of a group therapy session, more accurate speaker embeddings help ensure that the "Subjective" section actually contains the patient's report, and the "Plan" section contains the provider's instructions. This structural integrity is valuable for automated coding and billing compliance.

What This Means for Clinicians

Accurate speaker attribution transforms the review process. Instead of scanning an entire transcript for misattributed statements, clinicians can be more confident that the doctor's recommendations appear under the doctor's label and patient-reported symptoms stay with the patient. This reduces review time and lowers the risk of documentation errors reaching the legal medical record.

Conclusion: Beyond Convenience

In the world of healthcare AI, accuracy is a priority. As models continue to advance, the ability to generate text will become a commodity. The true differentiator for clinical tools will be the ability to structure and attribute that data correctly within a HIPAA compliant AI framework.

BastionGPT provides access to the top models, including GPT-5, GPT-4.1, GPT-4o, o3, Claude, and Gemini 3 Pro. Our investment in true multi-speaker recognition and VAD technology reflects a commitment to clinical integrity using these powerful tools. By addressing the "wall of text" problem, BastionGPT provides a solution designed to be robust enough for the complexities of real-world medicine. Whether managing a busy school assessment, a group therapy session, or a complex geriatric consult, providers need an AI partner that is built to understand who is speaking.

BastionGPT's approach to speaker recognition reflects a broader design principle: clinical AI should reduce work, not create more of it. By handling speaker identification via voice prints rather than asking an AI model to guess, the platform delivers transcripts that clinicians can review efficiently and trust more.

If you are interested, most organizations are able to sign up and begin using BastionGPT within 10 minutes, with no setup costs, a 7-day trial, and no fixed commitments.

Begin your AI journey with BastionGPT today by starting your trial here: https://bastiongpt.com/plus

If you have more questions or would like to connect – you can reach out at:

- Email: [email protected]

- Phone: (214) 444-8445

- Schedule a Chat: Book a Meeting

Frequently Asked Questions (FAQ)

What is the difference between an AI scribe and standard transcription?

Standard transcription converts speech to text word-for-word. An AI scribe goes further by analyzing that text to generate drafts of structured clinical documentation, such as SOAP notes or progress reports, and extracting key medical data. BastionGPT combines both, offering more accurate transcription with advanced automated note generation.

Is ChatGPT HIPAA compliant for creating patient notes?

The standard version of ChatGPT is not HIPAA compliant because your data is often used to train the AI models and it does not include a Business Associate Agreement (BAA). BastionGPT provides a HIPAA compliant ChatGPT solution by accessing these models through a secure, private environment where data is never used for training, data is never given to OpenAI, and a BAA is included with every subscription.

Does BastionGPT support multi-speaker recognition?

Yes. Unlike many generic AI scribes that rely on context to guess speakers, BastionGPT utilizes specialized speaker identification (diarization) technology designed to distinguish up to four speakers on standard plans and up to 12 speakers on Enterprise plans. This supports accurate attribution for complex encounters like family therapy or clinical rounds.

Can BastionGPT generate different note formats like SOAP, DAP, or BIRP?

Yes. BastionGPT is highly flexible and can be prompted to generate notes in any preferred clinical format, including SOAP, DAP, and BIRP. It also supports custom prompts for creating documentation from your transcriptions.

Which AI models does BastionGPT use?

BastionGPT integrates the most advanced and capable AI models available, including OpenAI (GPT-5, GPT-4.1, GPT-4o, o3), Claude, and Gemini 3 Pro. The platform automatically routes tasks to the best model for the job or allows users to select their preferred model within a secure, HIPAA compliant infrastructure.

How does BastionGPT handle data privacy?

BastionGPT is designed exclusively for healthcare professionals, so all plans include data security features that meet or exceed healthcare compliance regulations like HIPAA. Your data is never shared with third-party AI providers (like OpenAI or Google) for training or marketing purposes. BastionGPT automatically includes a BAA for all of our customers to support full compliance with HIPAA, PIPEDA, and APP regulations.

Why do Zoom or Teams transcripts know who is speaking, but AI scribes often struggle with this?

Platforms like Zoom, Google Meet, and Microsoft Teams can label speakers accurately because each participant is connected through a distinct device and user account. The system knows which audio stream belongs to which person before transcription even begins. In clinical settings, however, participants commonly speak into the same microphone in the same room. This makes speaker identification much harder and forces AI scribes to rely on audio patterns instead of device-based identity.
