Medical professionals spend hours each day creating clinical documentation. With BastionGPT, entire meeting transcripts and large patient histories can be processed in seconds and converted into drafts of documents such as SOAP notes, treatment plans, patient summaries, insurance reports, and many more. Try BastionGPT today to discover how hundreds of medical professionals are shaving hours off their day.

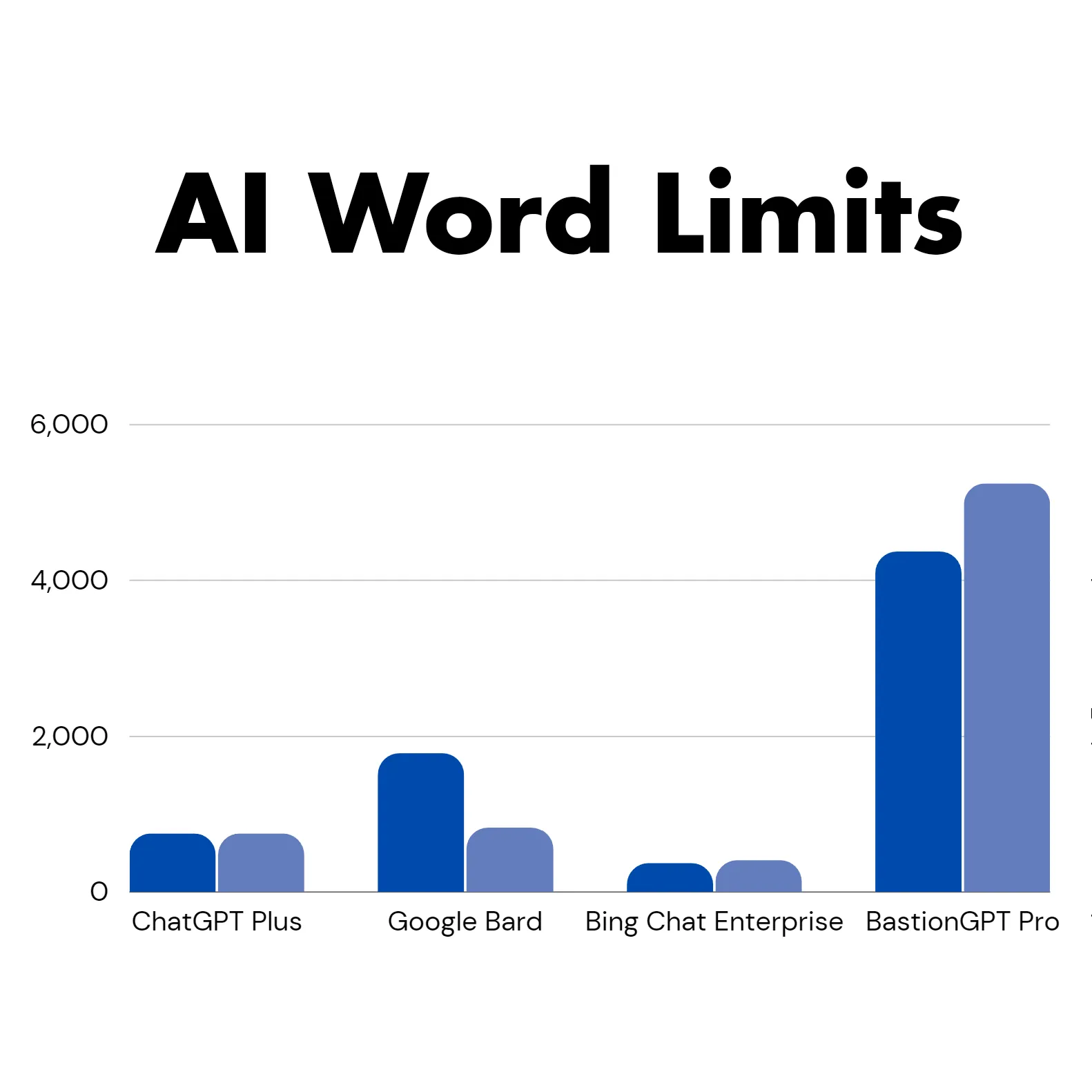

The limitations of AI assistants and AI scribes can be confusing unless you are an AI expert, because they are described in AI terms like “context lengths” and “tokens”. To help healthcare professionals understand these limitations without taking a course on artificial intelligence, we have made some generalizations and converted the data into more familiar units.

What are Tokens in Simple Terms?

AI models measure the amount of text they can handle in units called tokens, and different models can handle different amounts. A "token" usually refers to a chunk of text that the AI reads as one unit. Think of it as a piece of a puzzle. The puzzle, in this case, is a sentence or a paragraph that you want the AI to understand and process. A token can be as small as a single letter or as long as a whole word.

For example, in a simplified view of the sentence "Dr. Smith treats patients", each word and punctuation mark counts as one token, giving five tokens: "Dr", ".", "Smith", "treats", "patients".
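The split in the example above can be reproduced with a few lines of Python. This is only a minimal sketch of the simplified "one word or punctuation mark per token" view. The function name `simple_tokenize` is our own illustration, and real AI models use their own tokenizers, which often break longer or unusual words into several pieces.

```python
import re


def simple_tokenize(text: str) -> list[str]:
    """Split text into words and individual punctuation marks.

    Illustrative only: real AI tokenizers split text differently.
    """
    # \w+ matches a run of letters or digits (a word);
    # [^\w\s] matches a single punctuation mark.
    return re.findall(r"\w+|[^\w\s]", text)


tokens = simple_tokenize("Dr. Smith treats patients")
print(tokens)       # ['Dr', '.', 'Smith', 'treats', 'patients']
print(len(tokens))  # 5
```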

Real-World Token and Word Data for Healthcare

We studied real-world healthcare chats to give you a sense of how tokens and words typically measure up in medical settings. Here are the averages we found:

- 5.5 characters per word

- 3.6 characters per token

- 1.5 tokens per word

This data was compiled on September 20, 2023. Please note that these figures could change, and we'll do our best to update this information if that happens.
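To show how these averages can be used, here is a rough sketch that converts between words, characters, and tokens. The function names are hypothetical, and the results are estimates based only on the averages listed above, so the actual token count for any given note or transcript may differ.

```python
# Averages reported above (compiled September 20, 2023).
CHARS_PER_TOKEN = 3.6
TOKENS_PER_WORD = 1.5


def estimate_tokens_from_words(word_count: int) -> int:
    """Estimate how many tokens a document with this many words uses."""
    return round(word_count * TOKENS_PER_WORD)


def estimate_tokens_from_text(text: str) -> int:
    """Estimate tokens from the character length of a piece of text."""
    return round(len(text) / CHARS_PER_TOKEN)


def estimate_words_that_fit(token_limit: int) -> int:
    """Estimate how many words fit within a given token limit."""
    return int(token_limit / TOKENS_PER_WORD)


print(estimate_tokens_from_words(1000))  # 1500
print(estimate_words_that_fit(3000))     # 2000
```

For example, a 1,000-word patient history would use roughly 1,500 tokens, and a model limited to 3,000 tokens could take in about 2,000 words.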
